Moral Models for AI Safety

Description

In this project, we develop a methodology for identifying, formalising and implementing the moral values and goals that are relevant in various high-risk contexts. Based on this methodology, we hope that AI systems can be equipped with adaptive moral models that represent human moral standards.

Problem Context

In the flagship project MMAIS, we focus on the development of AI systems that align with human moral values. Especially in high-risk domains, it is often crucial to carefully consider and weigh the relevant ethical aspects in the decision-making process in order to minimise potential harm (e.g., social, economic, or physical harm). While humans are generally quite skilled at implicitly evaluating the moral aspects of a situation, AI systems currently cannot do so without some explicit representation of morality. If we cannot identify, formalise and implement the moral standards that should be adhered to in an operational context, there is a risk that AI systems exhibit behaviour (e.g., giving advice or performing actions) that does not align with human moral standards. In many applications of AI, avoiding this risk requires AI systems to have some intrinsic notion of the relevant moral values. Such a notion also makes it possible for AI systems to explain and reflect on the ethics of their (harmful) behaviour, which helps us understand how to adjust and control them effectively.

The need to identify, formalise and implement moral standards in the design and use of our AI systems is apparent. However, progress in this complex field of developing appropriate methods to do so is currently stagnating. One reason for this stagnation is the existence of various perspectives, each advocating its own singular solution: the legal (i.e., who to blame?), ethical (i.e., how to ensure law-abidance?), philosophical (i.e., who to kill?), and technical (i.e., how to learn morality?) perspectives. The experts of each perspective dictate to the others what their role and responsibility is in designing, developing and using AI systems. At the same time, there are profound disagreements between them. This creates an impasse in the research field that needs to be overcome.

Solution

TNO has a unique opportunity to overcome this impasse by evaluating and improving upon the methods from each perspective and capturing them in a single methodology. For different kinds of AI systems, this methodology will dictate how to design, develop, and use the system so that its behaviour adheres to human moral standards and, if it does not behave in a morally aligned way, how to adjust or control that behaviour to bring it into alignment. The methodology aims to identify, formalise and implement the relevant moral values and goals that should govern the AI system’s behaviour. We believe that this methodology could result in AI systems that are equipped with an adaptive moral model. This model represents the moral values and goals with which the system should comply in a high-risk operational context, enhanced by appropriate human-AI interaction that allows the moral model to be evaluated and adjusted over time. In MMAIS, we will research the methods required to obtain such an adaptive moral model and the appropriate human-AI interaction.

Results

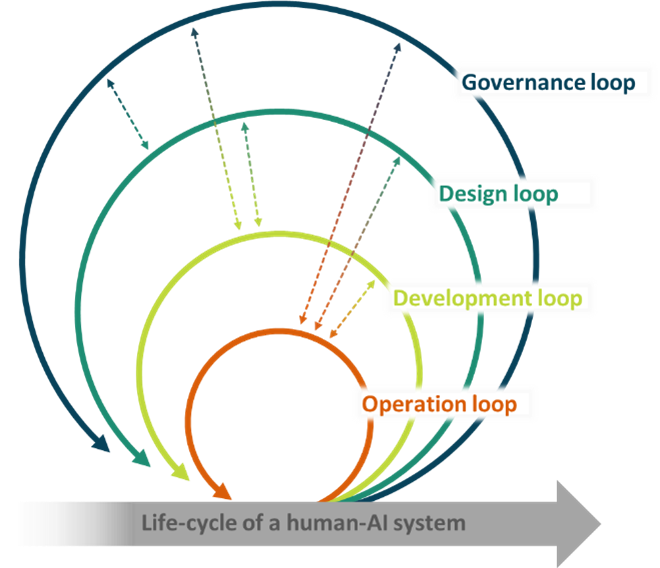

In 2022 and 2023, we developed the SOTEF (socio-technological feedback) methodology for the governance, design, development, and use of AI systems. This methodology is built on a formal moral model with clearly defined moral values. The SOTEF loop facilitates the iterative and inclusive elicitation, specification, and implementation of moral values, as well as the monitoring of value alignment. Each iteration of this process yields an improved moral model that allows an AI system to behave acceptably in a constrained and predefined context. The SOTEF loop involves different stakeholders (engineers, legislators, authorities, and end-users) in an iterative process of auditing and adapting the moral model in order to control the AI system’s behaviour. This socio-technological feedback loop provides the adaptivity that high-risk AI systems require in a changing world.

In 2023, we also carried out a first research iteration of the SOTEF loop and its methodology in the context of autonomous vehicles. We developed various methods and techniques for eliciting, specifying, and implementing moral values in the design and development loops, and for monitoring value alignment in the operational loop. In 2024, we will shift to the domain of preventive healthcare to investigate the design, development and alignment of a clinical decision support system, building on the methods and techniques developed in 2023.

Contact

- Tjeerd Schoonderwoerd, Research Scientist Innovator AI, TNO, e-mail: tjeerd.schoonderwoerd@tno.nl